06. Quiz: TensorFlow Cross Entropy

Cross Entropy in TensorFlow

As with the softmax function, TensorFlow has a function to do the cross entropy calculations for us.

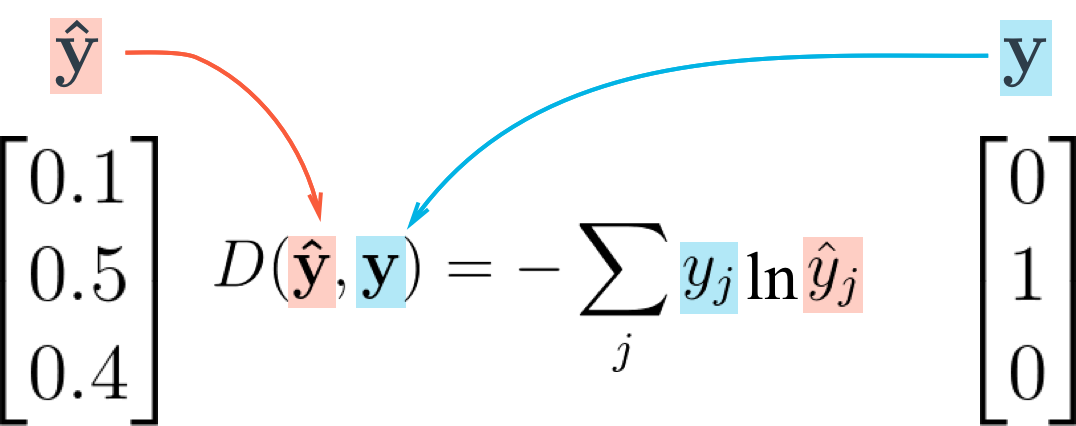

Cross entropy loss function
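
For reference, the standard cross entropy between a predicted distribution $\hat{y}$ (the softmax output) and a one-hot label $y$ can be written as below. The symbol names are assumptions and may differ from those used in the figure.

$$
D(\hat{y}, y) = -\sum_{j} y_j \ln(\hat{y}_j)
$$

Because $y$ is one-hot, only the term for the correct class is nonzero, so the loss reduces to the negative natural log of the probability assigned to that class.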

Let's take what you learned from the video and create a cross entropy function in TensorFlow. To do so, you'll need two new functions:

Reduce Sum

x = tf.reduce_sum([1, 2, 3, 4, 5])  # 15

The tf.reduce_sum() function takes an array of numbers and sums them together.

Natural Log

x = tf.log(100.0)  # 4.60517

This function does exactly what you would expect it to do. tf.log() takes the natural log of a number.
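
To check the two results above by hand, here is a minimal plain-Python sketch of the same arithmetic. It uses the standard library's sum() and math.log() as stand-ins for tf.reduce_sum() and tf.log(); it is not TensorFlow code, and the variable names are illustrative only.

```python
import math

# Plain-Python counterpart of tf.reduce_sum([1, 2, 3, 4, 5])
total = sum([1, 2, 3, 4, 5])

# Plain-Python counterpart of tf.log(100.0): the natural (base-e) logarithm
natural_log = math.log(100.0)

print(total)                  # 15
print(round(natural_log, 5))  # 4.60517
```

Note that tf.log() is the TensorFlow 1.x name; in TensorFlow 2.x the same operation is available as tf.math.log().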

Quiz

Print the cross entropy using softmax_data and one_hot_encod_label.
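
As a way to sanity-check a quiz answer, the calculation can be sketched in plain Python. The values assigned to softmax_data and one_hot_encod_label below are hypothetical, chosen only for illustration, and the cross_entropy helper is an assumed name, not part of TensorFlow.

```python
import math

def cross_entropy(softmax_probs, one_hot_label):
    """Return -sum(y_j * ln(p_j)) over all classes j.

    Assumes every probability is strictly positive, as softmax outputs are.
    """
    return -sum(y * math.log(p) for p, y in zip(softmax_probs, one_hot_label))

# Hypothetical values, for illustration only (not taken from the quiz).
softmax_data = [0.7, 0.2, 0.1]
one_hot_encod_label = [1.0, 0.0, 0.0]

# With TensorFlow 1.x tensors, the same expression would combine the two
# functions introduced above: -tf.reduce_sum(one_hot * tf.log(softmax))
loss = cross_entropy(softmax_data, one_hot_encod_label)
print(round(loss, 4))  # 0.3567
```

Only the first class contributes here, so the result is simply -ln(0.7).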
